This is the last module of our beginner’s tutorial! My, how time flies.

So, what’s the last piece of knowledge?

Rolling updates, which let Deployment updates take place with no downtime.

How?

By incrementally replacing Pod instances with new ones, which get scheduled on Nodes with available resources.

Earlier, we scaled our application to run multiple instances. That is what lets us update without affecting availability.

By default, the maximum number of Pods that can be unavailable during an update works out to one (1) for our four replicas (the Deployment default is 25%, rounded down), but you can set it to another number or to a different percentage of Pods.
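To see how a percentage turns into a Pod count, here is a tiny sketch. It assumes the setting in question is the Deployment's spec.strategy.rollingUpdate.maxUnavailable field, which Kubernetes rounds down when it is given as a percentage. The helper name max_unavailable is made up for illustration.

```shell
# Hypothetical helper: how many Pods may be unavailable for a given
# replica count and maxUnavailable percentage (rounded down).
max_unavailable() {
  # $1 = number of replicas, $2 = percentage (without the % sign)
  echo $(( $1 * $2 / 100 ))
}

max_unavailable 4 25    # prints 1
max_unavailable 10 25   # prints 2
```

So with our four replicas, 25% means one Pod at a time.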

In Kubernetes, updates are versioned, and any Deployment update can be reverted to a previous version if it doesn’t work out so nicely.

What if said Deployment is exposed publicly? Then the Service will load-balance the traffic only to available Pods (that is, ones not being updated at that exact time).

Rolling updates let you promote an application from one environment to another (via container image updates), roll back to previous versions, and do continuous integration and delivery of applications, all with no downtime!

Deployments and Pods - Still 4/4!

kubectl describe pods gives us a lot of information, but somewhere in that infodump, the Image field tells us that our Pods are running version 1. We want to update them.
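If you'd rather not scroll through the whole infodump, a small filter can pull out just the image names. This is only a sketch: pod_images is a made-up helper, and it assumes the describe output has lines starting with "Image:" followed by the image name.

```shell
# Hypothetical helper: reads `kubectl describe pods` output on stdin
# and prints each distinct container image once.
pod_images() {
  # Match only lines whose first field is exactly "Image:"
  # (this skips the "Image ID:" lines).
  awk '$1 == "Image:" { print $2 }' | sort -u
}

# Usage against a running cluster (needs kubectl):
#   kubectl describe pods | pod_images
```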

Changed the layout!

Seems like our Pods were updated to a new image from the jocatalin repository. See what happens next!
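For reference, an image update like this is typically kicked off with kubectl set image. The sketch below wraps it in a made-up helper called update_image. The Deployment and container name (kubernetes-bootcamp) and the image (jocatalin/kubernetes-bootcamp:v2) are assumptions based on the names that show up in this module, so swap in your own.

```shell
# Hypothetical helper around `kubectl set image`.
update_image() {
  # $1 = deployment name, $2 = container name, $3 = new image
  kubectl set image "deployments/$1" "$2=$3"
}

# Usage against a running cluster (names below are assumptions):
#   update_image kubernetes-bootcamp kubernetes-bootcamp jocatalin/kubernetes-bootcamp:v2
```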

Let’s make sure the update is working by describing the Service.

Enjoy stats such as the

- Name (kubernetes-bootcamp)

- The node port (<unset> 31139/TCP)

- The IP and port (10.101.170.210 and <unset> 8080/TCP).

Make an environment variable called NODE_PORT with the port assigned.

And there’s our variable with the corresponding number.
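One way to fill that variable without copying the number by hand is to parse it out of the describe output. This is a rough sketch: node_port is a made-up helper, and it assumes the NodePort line looks like the one above (NodePort: <unset> 31139/TCP).

```shell
# Hypothetical helper: reads `kubectl describe services/...` output on
# stdin and prints just the NodePort number.
node_port() {
  # The last field looks like "31139/TCP"; keep the part before the slash.
  awk '$1 == "NodePort:" { split($NF, parts, "/"); print parts[1] }'
}

# Usage against a running cluster (needs kubectl):
#   export NODE_PORT=$(kubectl describe services/kubernetes-bootcamp | node_port)
#   echo "NODE_PORT=$NODE_PORT"
#
# Alternative without text parsing:
#   kubectl get services/kubernetes-bootcamp -o jsonpath='{.spec.ports[0].nodePort}'
```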

curl $(minikube ip):$NODE_PORT hits a different Pod with every request, and all Pods are running the latest version.

Let’s also confirm with a rollout status command:

kubectl rollout status deployments/kubernetes-bootcamp

The response we get is essentially “The deployment is out!”

Perhaps the update isn’t what we wanted, so let’s roll it back.

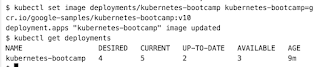

The version is updated to v10, so let’s see our Deployments.

Hmm. One too many. That’s more resources than we need.

Using the describe command gives us a lot of information, but in short: there’s no image tagged v10 in the repository.

So let’s roll it back with the rollout undo command to return to v2, and there are four Pods once again.
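The rollback can be sketched as a small helper that undoes the last rollout and then waits for it to settle. The name rollback is made up, and the Deployment name in the usage line is assumed to be kubernetes-bootcamp as elsewhere in this module.

```shell
# Hypothetical helper: revert a Deployment to its previous revision,
# then report the rollout status.
rollback() {
  # $1 = deployment name
  kubectl rollout undo "deployments/$1" &&
    kubectl rollout status "deployments/$1"
}

# Usage against a running cluster (needs kubectl):
#   rollback kubernetes-bootcamp
```

After that, kubectl get pods should show four Pods again.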
